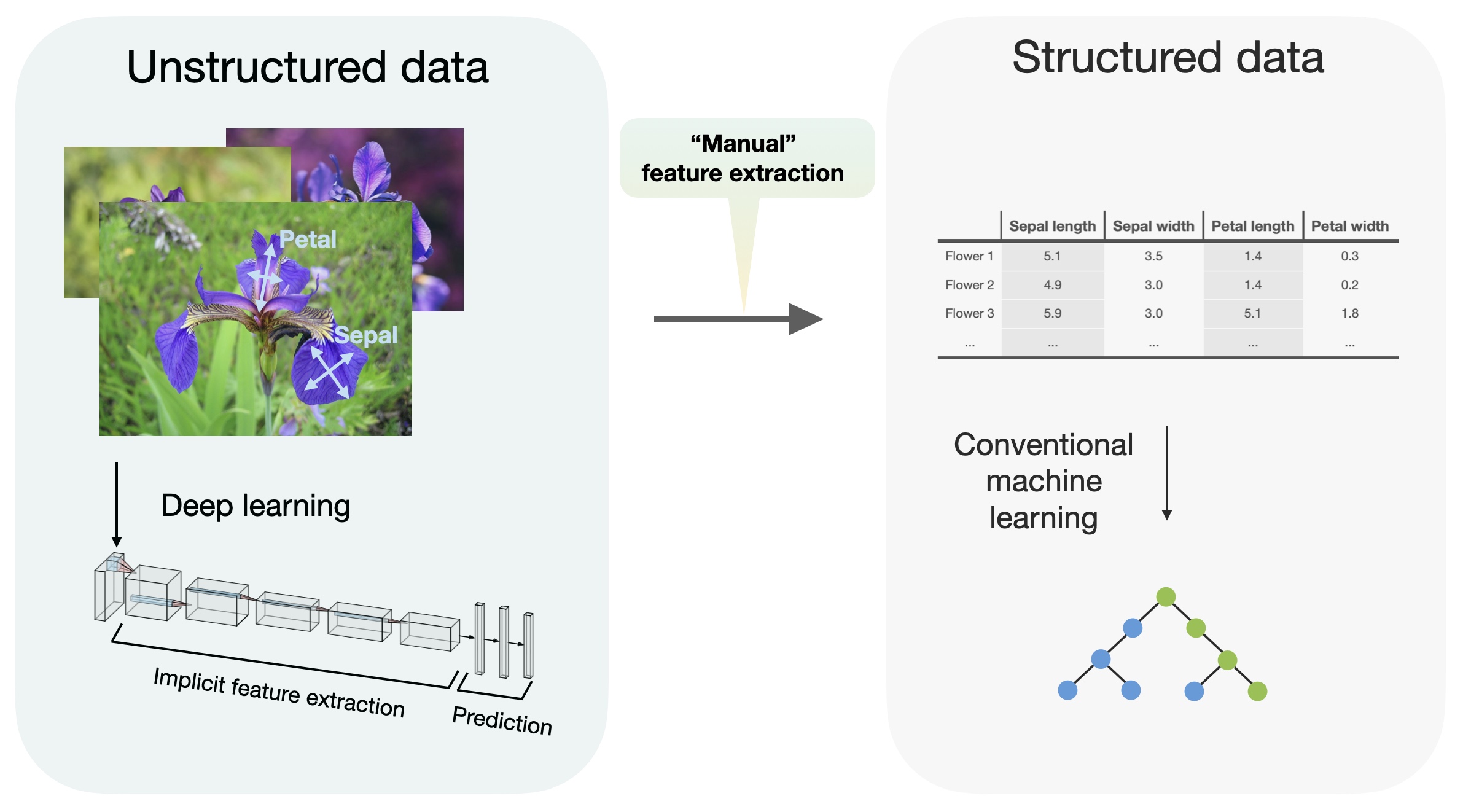

1 Introduction

Due to the tremendous success of deep learning for unstructured data like raw images, audio and texts (Goodfellow et al., 2016), there has been a lot of research interest in extending this success to problems with structured data stored in tabular form. In these problems, data points are represented as vectors of heterogeneous features, which is typical for industrial applications and ML competitions, where non-deep models, e.g., GBDT (Chen and Guestrin, 2016; Prokhorenkova et al., 2018; Ke et al., 2017), are currently the top-choice solutions. Along with potentially higher performance, using deep learning for tabular data is appealing because it would allow constructing multi-modal pipelines for problems where only one part of the input is structured and other parts include images, audio and other DL-friendly data. Such pipelines can then be trained end-to-end by gradient optimization for both structured and unstructured modalities. For these reasons, a large number of DL solutions were recently proposed, and new models continue to emerge (Klambauer et al., 2017; Popov et al., 2020; Arik and Pfister, 2020; Song et al., 2019; Wang et al., 2017, 2020a; Badirli et al., 2020; Hazimeh et al., 2020; Huang et al., 2020a).

Given the increasing number of works on tabular DL, we believe it is timely to review the recent developments from the field to identify more justified conclusions that can serve as a basis for future studies. To this end, we perform a large-scale evaluation of many recent open-sourced DL models on a wide range of datasets with the same protocol of hyperparameter tuning. Our study has revealed several curious findings. First, we do not observe a definitive superiority of GBDT and DL models w.r.t. each other, and their relative performance largely depends on the particular task. For instance, GBDT is superior for data with heterogeneous features, but lags behind DL solutions on non-heterogeneous data and scales poorly to classification problems when the number of classes is large (1K+). Second, we investigate the relative performance of DL models to each other.

# Revisiting Deep Learning Models for Tabular Data

The necessity of deep learning for tabular data is still an unanswered question addressed by a large number of research efforts. The recent literature on tabular DL proposes several deep architectures reported to be superior to traditional “shallow” models like Gradient Boosted Decision Trees (GBDT). However, since existing works often use different benchmarks and tuning protocols, it is unclear if the proposed models universally outperform GBDT. Moreover, the models are often not compared to each other; therefore, it is challenging for practitioners to identify the best deep model. In this work, we start from a thorough review of the main families of DL models recently developed for tabular data. We carefully tune and evaluate them on a wide range of datasets and reveal two significant findings. First, we show that the choice between GBDT and DL models highly depends on data and there is still no universally superior solution. Second, we demonstrate that a simple ResNet-like architecture is a surprisingly effective baseline, which outperforms most of the sophisticated models from the DL literature. Finally, we design a simple adaptation of the Transformer architecture for tabular data that becomes a new strong DL baseline and reduces the gap between GBDT and DL models on datasets where GBDT dominates. The source code is available at \repository.
